The Impact of AI Phobia on Tech Adoption and Innovation in 2025

Key Takeaways

AI phobia in 2025 isn’t just hesitation—it’s a real psychological barrier that directly slows tech adoption and innovation. Understanding and addressing this fear through transparent communication, inclusive culture, and practical training can unlock AI’s full potential for your team and business.

- Recognize AI phobia as a twofold challenge: anticipatory anxiety and annihilation anxiety cause real employee resistance that lowers engagement and stalls adoption efforts.

- Prioritize clear, honest communication at every level to reduce fear and build trust around AI’s role and limitations.

- Involve employees early with inclusive pilot programs to turn skepticism into curiosity and co-create AI-driven workflows.

- Bridge the innovation gap by addressing AI fears head-on: slow adoption risks losing market share, revenue, and efficiency to more AI-ready competitors.

- Build a culture-first AI strategy by aligning adoption with transparent goals, ethical training, and participatory decision-making.

- Counteract media-driven fear with balanced narratives that combine fact-based education and real-world AI success stories.

- Invest in ongoing AI literacy programs and ethical governance to prepare your workforce for a decade of accelerated AI innovation.

- Focus on people and trust as the foundation of AI success: one enterprise that paired transparent communication with employee-led AI committees saw adoption rise by 60% in under a year.

Overcoming AI phobia isn’t optional—it’s essential to transform fear into a launchpad for innovation and competitive advantage in today’s AI-driven landscape. Dive into the full article to learn actionable strategies and sector-specific insights that power successful AI adoption.

Introduction

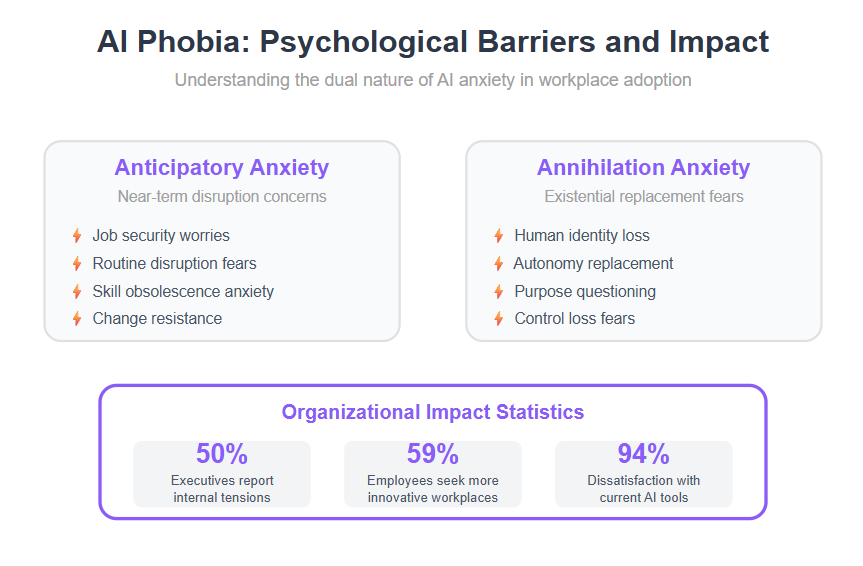

Imagine launching a powerful AI tool at your company, only to have half your team push back—worried it’s a sign their jobs are next. This isn’t rare. In 2025, nearly 50% of executives report internal tensions fueled by AI-related fears, turning promise into paralysis.

AI phobia isn’t just hesitation; it’s a mix of anxiety and skepticism that quietly stifles innovation and slows tech adoption. For startups, SMBs, and enterprises alike, ignoring this fear means missing out on faster delivery, smarter workflows, and new revenue streams.

If you’re looking to leverage AI but sense resistance beneath the surface, understanding these psychological barriers is crucial. You’ll discover:

- The root causes behind AI anxiety and how it affects your team

- Why leadership styles and company culture either ease or amplify fears

- Practical strategies to transform resistance into engagement and momentum

Recent survey research, discussed below, points to specific psychological barriers and shows how they affect tech adoption and employee well-being.

Navigating AI phobia effectively can turn internal friction into a catalyst for faster adoption and better results.

Next, we’ll unpack the core psychological dynamics shaping this fear—and why addressing them first is essential before pushing the “go AI” button.

Understanding AI Phobia: Psychological Roots and Implications for Tech Adoption

AI phobia isn’t just hesitation—it’s a deep-seated fear that shows up in workplaces and society as skepticism, anxiety, or outright resistance toward AI technologies.

This fear breaks down into two main psychological dimensions, both of which significantly influence human behavior:

- Anticipatory anxiety: Worry about how AI might disrupt jobs or routines in the near future.

- Annihilation anxiety: More existential—the fear that AI could replace human identity or autonomy altogether.

AI phobia can have negative effects on employee morale, psychological safety, and overall organizational outcomes, making it crucial for leaders to address these concerns proactively.

How These Fears Shape Employee Experience

When these anxieties take hold, employees often react with:

- Reduced engagement with new tools

- Lower job satisfaction

- Skepticism that slows adoption efforts

- Concerns over job security due to fears that AI-driven automation may threaten employment stability

This isn’t just resistance for resistance’s sake. It impacts morale and slows companies from reaping AI’s real benefits.

AI Phobia’s Role Beyond the Individual

These fears ripple outward, fueling broader resistance against adopting any new tech.

AI phobia can significantly impact organizational dynamics, disrupting workplace relationships, social interactions, and internal processes as employees and teams struggle to adapt to technological change.

For example, nearly 50% of executives in 2025 report internal tensions linked to AI anxiety, while 94% express dissatisfaction with current AI tools, according to a recent survey reported under the headline “AI is ‘tearing apart’ companies, survey finds.” This disconnect highlights how organizational culture and leadership communication play a big part in either amplifying or easing this anxiety.

Visualize This

Picture a startup rolling out a new AI-driven dashboard. Early excitement fades because half the team is wary it’s a test run for layoffs. Trust breaks down. Productivity stalls.

Why This Matters Now

Understanding these psychological roots is essential before pushing AI adoption harder.

Technological change, such as the rapid introduction of AI, can intensify AI phobia and resistance among employees by increasing uncertainty and disrupting established work routines.

Recent studies spotlight that effective innovation depends on acknowledging and addressing AI phobia first. Without this step, tech initiatives hit walls—no matter how cutting-edge the software.

Quick Takeaways for Your Team

- Recognize anticipatory and annihilation anxiety as real barriers, not just excuses.

- Prioritize clear, honest communication to reduce fear.

- Invest in training that empowers employees rather than overwhelms them.

AI phobia in 2025 isn’t a roadblock; it’s a signpost pointing to where empathy and strategy must lead next in your AI journey.

Understanding AI Systems and Their Limitations

Artificial intelligence (AI) systems are now woven into the fabric of daily life and organizational operations, powering everything from customer service chatbots to advanced data analysis tools. As AI adoption accelerates, it’s easy to focus on the promise of AI: faster workflows, smarter decisions, and new business models. But to truly address AI phobia and foster responsible innovation, it’s essential to understand both the capabilities and the boundaries of these technologies.

What AI Can—and Can’t—Do in 2025

AI systems in 2025 excel at processing massive datasets, identifying patterns, and generating valuable insights that would be impossible for humans to uncover at scale. They automate repetitive tasks, streamline customer interactions, and support decision making with data-driven recommendations. Generative AI, in particular, can create content, simulate scenarios, and even assist in creative brainstorming.

However, AI systems are not a substitute for human capabilities. They lack empathy, critical thinking, and the nuanced understanding that comes from lived experience. AI models are only as good as the data they’re trained on—if that data is biased or incomplete, the AI’s outputs can be flawed or unfair. Even the most advanced generative AI can produce misleading or inaccurate information, which can inadvertently fuel AI phobia when users encounter errors or unexpected results.

Recognizing these strengths and weaknesses is key to leveraging AI as a powerful tool while remaining aware of its limitations.

Common Misconceptions Fueling AI Phobia

AI phobia often grows from misconceptions about what AI systems and AI models can actually do. One widespread fear is that AI will completely replace human workers, leading to mass unemployment. In reality, while AI can automate certain tasks, it is most effective when it augments human capabilities—freeing up people to focus on higher-value work that requires creativity, emotional intelligence, and complex problem-solving.

Another misconception is that AI systems are infallible, making perfect decisions without error. In truth, AI models can make mistakes, especially when faced with new or ambiguous situations. Without human intervention and oversight, these errors can have unintended consequences. That’s why explainable AI is gaining traction: by making AI’s decision-making processes more transparent, organizations can build trust and ensure that human workers remain central to critical decisions.

Understanding these realities helps organizations and individuals view AI as a collaborative partner, not a mysterious black box.

How Limitations Shape Adoption Fears

The limitations of AI systems—such as the potential for bias, error, and lack of transparency—are at the heart of many adoption fears. Concerns about job displacement, uncertainty about how AI works, and anxiety over losing control all contribute to AI phobia. These fears can slow the AI adoption process and prevent organizations from realizing the full benefits of technological progress.

The solution lies in acknowledging these limitations openly. By investing in AI-related skills, promoting transparency in AI decision making, and ensuring robust human oversight, organizations can build confidence in new AI systems. Responsible AI development means integrating human judgment at every stage, so that AI serves as a tool for empowerment rather than a source of anxiety. This approach not only addresses fears but also paves the way for sustainable, people-centered innovation.

Organizational Impact: Employee Resistance and Leadership Challenges

AI phobia fuels internal tensions as companies race to adopt AI without fully addressing employee fears, especially fears of job loss and displacement. This resistance isn’t just anxiety; it turns into real conflict that slows progress and frays teams.

Organizations face significant challenges when adopting AI, particularly in balancing human and AI work so that both productivity and trust are maintained.

Executive dissatisfaction and employee concerns collide

Recent data shows 94% of C-suite leaders are unhappy with current AI tools. At the same time, employees worry about job displacement and feel uncertain about the value AI brings. Human resources teams play a critical role in managing these concerns and in supporting AI adoption within the organization. The mismatch creates a two-way friction:

- Leaders push for rapid AI integration to stay competitive

- Employees fear losing roles or being replaced by automation

This mismatch drives low morale, decreased engagement, and higher turnover—59% of executives report team members looking for more innovation-driven workplaces.

Culture sets the stage for AI acceptance or rejection

Whether AI phobia explodes or eases depends heavily on organizational culture. Companies that foster open dialogue, encourage transparency, and include employees in AI planning see fewer barriers. In contrast:

- Top-down AI rollouts without employee input amplify fear

- Lack of clear purpose behind AI initiatives breeds suspicion and resistance

Redefining human roles in the context of AI adoption is crucial, as it helps employees see how their work will be augmented rather than replaced, reducing fear and resistance.

Aligning AI adoption with well-defined goals and inclusive change management keeps teams informed and involved, turning anxiety into curiosity and momentum.

Practical steps to ease AI tensions

To stem internal friction and build confidence:

- Communicate AI goals clearly and honestly at every level

- Provide training that addresses “how” AI impacts jobs, not just “what” AI is

- Involve employees in pilot programs to co-create AI workflows

- Reinforce accountability and trust: no hiding AI decision rationales

Picture a company hosting AI demo days where staff can test tools and ask questions in real time. This hands-on exposure humanizes AI and reduces unknowns. Such initiatives are also a practical way to foster human-AI collaboration, helping employees and AI systems work together more effectively within organizational workflows.

“AI phobia isn’t just a tech problem—it’s a people problem.”

“Leadership and employee alignment is the secret sauce for smooth AI adoption.”

“Involving your team early is the best antidote to AI fear.”

Internal resistance caused by AI phobia manifests as tangible leadership challenges and talent risks. Tackling these requires a culture-first mentality combined with crystal-clear communication and shared ownership of AI’s role. Without it, teams fracture, innovation stalls, and adoption lags.

AI Development and Governance: Building Responsible Adoption

As organizations race to adopt AI technologies, the need for responsible AI development and governance has never been greater. It’s not enough to simply deploy new AI systems—leaders must ensure that these tools are developed, implemented, and managed in ways that align with ethical standards and societal values. This is especially important in an era where AI phobia can undermine trust and stall progress.

Why Governance Matters in the Age of AI Phobia

Effective governance provides the foundation for trustworthy AI adoption. It establishes clear ethical guidelines for how AI systems are built and used, ensuring that human capabilities remain at the center of technological progress. Governance frameworks promote transparency, requiring that AI models are explainable and that their decision-making processes can be understood and scrutinized by human oversight bodies.

Strong governance also means having mechanisms in place to address concerns and unintended consequences—whether that’s job loss, bias, or safety risks in the workplace. By setting standards for responsible AI development and encouraging international cooperation, organizations can help ensure that AI serves the greater good, driving economic growth while safeguarding human interests.

Ultimately, prioritizing governance is about creating a delicate balance: harnessing AI’s potential for innovation and efficiency, while minimizing risks and building public trust. When organizations commit to ethical guidelines and transparent practices, they not only address the root causes of AI phobia but also lay the groundwork for sustainable, inclusive adoption of AI technologies.

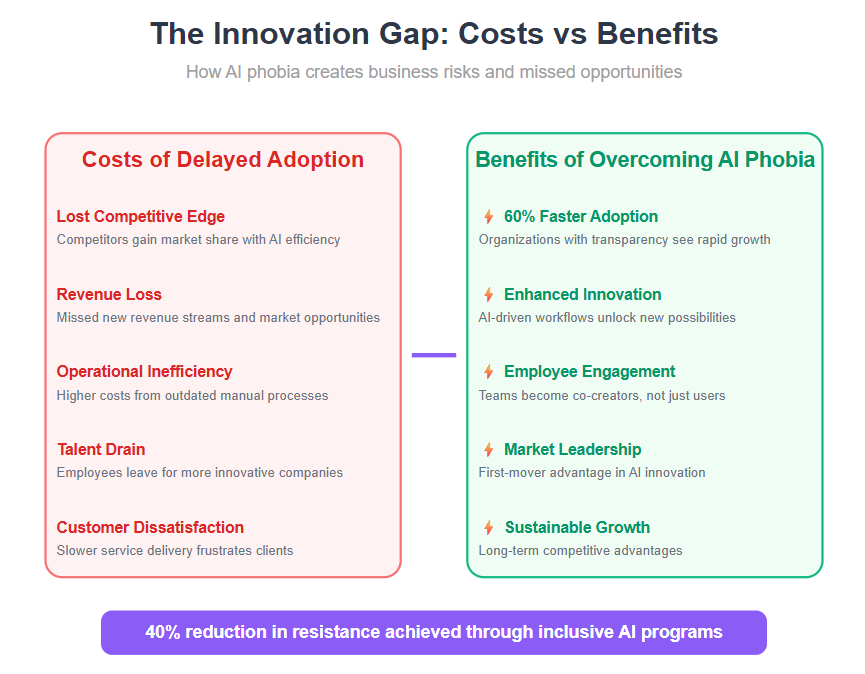

The Innovation Gap: How AI Phobia Slows Technology Adoption and Progress

Many industries are changing rapidly as AI technology advances, yet still struggle to integrate AI tools effectively. Sectors like healthcare, education, and finance show a notable lag between innovation and real-world adoption.

AI could transform workflows and create new opportunities in these industries, but only if organizations address the barriers to adoption.

Why the gap matters

This slow uptake isn’t just a minor hiccup. It’s tied to what experts call the “innovation fallacy”: the idea that the economic benefits of AI appear only when multiple sectors adopt it broadly and simultaneously. Without widespread use, AI’s potential remains locked, limiting market growth and competitive edge.

Consequences of delayed AI adoption

The risks of dragging your feet on AI include:

- Falling behind competitors who leverage AI for efficiency

- Missing new revenue streams created by AI-driven services

- Increased costs due to outdated manual processes

- Slowed product development timelines that frustrate customers

Failing to adopt AI in a timely manner can also lead to adverse outcomes, such as missed opportunities, increased operational risks, and negative impacts on employee well-being.

Consider a healthcare provider hesitant to use AI diagnostics. It may delay life-saving tools, pushing patients to facilities with newer tech. Likewise, a bank that avoids AI for fraud detection risks more security breaches and lost customers.

Real-world examples highlight the stakes

- The finance sector has lagged behind despite AI’s proven ability to enhance risk assessment and automate compliance.

- Educational institutions are still grappling with incorporating AI tutors, missing chances to personalize learning at scale.

- Hospitals unwilling to embrace AI-assisted imaging tools are slower in diagnosis, affecting outcomes.

- Manufacturers slow to integrate AI into manufacturing processes face inefficiencies in automation, inspection, and process control, resulting in lost opportunities.

These holdbacks put pressure on leadership to move beyond fear and fully commit to AI adoption.

Immediate takeaways to accelerate progress:

- Recognize that AI fears create a costly innovation gap that impacts growth.

- Drive cross-department collaboration to create shared AI goals and lower resistance.

- Explore pilot AI projects with clear metrics to showcase quick wins and build trust.

"Fear of AI doesn’t just stall projects—it slows entire industries down."

Picture the difference between watching your competitor launch an AI-enhanced product months ahead versus your team stuck debating “what if.” That lost time translates into missed markets and revenue. Closing the innovation gap starts by addressing AI phobia head-on with inclusive, data-driven strategies.

For a deeper dive, check out our sub-page: How AI Phobia Slows Down Cutting-Edge Innovation Worldwide—where we break down sector-specific impacts and solutions.

The key takeaway here is simple: bridging the innovation gap caused by AI phobia unlocks real competitive advantage and fuels market growth across industries. Don’t let fear be your bottleneck.

Strategic Approaches to Overcoming AI Phobia in Organizations

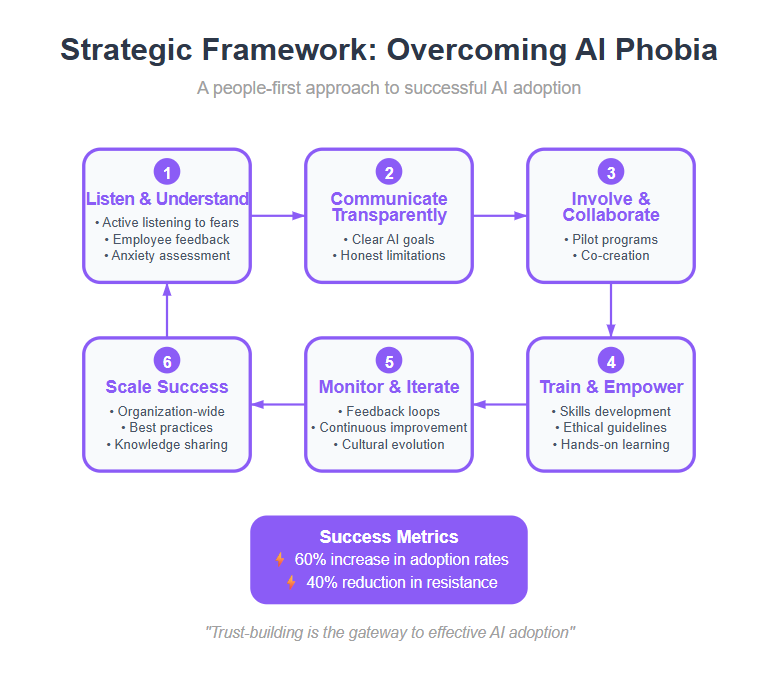

Putting people first is the single most effective strategy to ease AI phobia before rolling out new technologies. Addressing human concerns head-on creates a foundation of trust and openness.

To successfully integrate AI, organizations must plan carefully, considering both technological and human factors to ensure alignment with their goals and to support employee well-being.

Clearly defining AI's role within the organization is essential to build trust and reduce fear, ensuring that employees understand how AI will complement their work and contribute to strategic objectives.

Building Trust and Transparency

Successful AI adoption hinges on clear communication and transparent risk assessment. Employees need to understand not just the what, but the why and how behind AI tools.

Key strategies include:

- Listening actively to employee fears and feedback

- Sharing realistic goals and expected impacts

- Offering detailed info about data privacy and algorithmic fairness

- Enhancing transparency in AI models to build trust

This approach shifts AI from an unknown threat to a shared opportunity.

Explainable AI techniques, such as LIME and SHAP, can shed light on AI decision-making processes, further enhancing transparency and trust in these systems.
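To make the idea of an explanation concrete, here is a minimal sketch in Python. It does not use the LIME or SHAP libraries. Instead it takes a toy linear scoring model, where each feature's contribution can be worked out by hand as its weight times its distance from a baseline value. The feature names, weights, and baseline values are all hypothetical.

```python
# Toy additive explanation for a linear scoring model.
# Every name and number here is hypothetical, for illustration only.

WEIGHTS = {"tenure_years": 0.5, "open_tickets": -2.0, "training_hours": 1.0}
BIAS = 10.0
# Baseline input, e.g. a typical or average case to compare against.
BASELINE = {"tenure_years": 4.0, "open_tickets": 3.0, "training_hours": 2.0}


def predict(features):
    """Score an input with the linear model: bias plus weighted features."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features):
    """Return each feature's contribution relative to the baseline input."""
    return {
        name: WEIGHTS[name] * (features[name] - BASELINE[name])
        for name in WEIGHTS
    }


example = {"tenure_years": 6.0, "open_tickets": 1.0, "training_hours": 2.0}
contributions = explain(example)

# The contributions add up to the gap between this prediction
# and the prediction for the baseline input.
gap = predict(example) - predict(BASELINE)

for name, value in contributions.items():
    print(f"{name}: {value:+.1f}")
print(f"total gap vs. baseline: {gap:+.1f}")
```

The point for a non-technical audience is the shape of the output: a short list saying which inputs pushed a decision up or down, and by how much. Real models are rarely this simple, which is why dedicated explanation tools exist.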

Elevating Accountability and Participation

Fostering a participatory culture where staff are involved in AI decision-making eases anxiety and builds ownership.

Organizations that empower teams with:

- Collaborative AI pilot programs

- Open forums for questions and idea exchange

- Clear accountability structures around AI outcomes

have seen measurable improvements in employee engagement.

Training and Ethical Use as Pillars

Comprehensive training programs tailored to skill levels demystify AI and boost confidence. Training should cover:

- Practical AI tool usage linked to daily tasks

- Ethical guidelines following frameworks like OECD principles

- Clear boundaries on AI’s role to avoid unrealistic expectations

Emphasizing responsible development in AI training and deployment ensures governance, transparency, fairness, and safety, supporting ethical and sustainable progress.

For instance, a US startup recently cut employee resistance by 40% after launching hands-on AI skill workshops paired with ethics training.

Real-World Success Stories

One LATAM enterprise overcame AI phobia by pairing transparent communication with employee-led AI committees that showed how AI helps employees by automating tasks. Adoption rates rose by 60% in under a year.

Another SMB integrated a “people-first” rollout strategy that prioritized transparent updates and ongoing feedback loops, leading to higher morale and faster ROI.

Takeaways You Can Use Today

- Start with honest conversations about AI fears in your team

- Create small, inclusive pilot projects to build trust gradually

- Invest in tailored training that connects AI to real-world employee benefits

“Trust-building is the gateway drug to effective AI adoption.”

“People resist what they don’t understand; transparency dissolves that barrier.”

“Involving employees transforms AI from a threat into a toolkit for innovation.”

By focusing on human-centered strategies and ethical frameworks, your organization can turn AI phobia into a launchpad for accelerated adoption and meaningful innovation.

Check out our sub-pages “5 Proven Strategies to Overcome AI Phobia in Tech Adoption” and “Why Inclusive AI Education Is Revolutionizing Tech Adoption” for practical, step-by-step guidance.

Media Influence and Public Perception: Shaping the Narrative Around AI Fear

Media coverage plays a huge role in amplifying AI phobia today. Sensational headlines and fear-driven stories grab attention but often distort the reality of AI’s impact.

Adopting the mindset of viewing AI as a collaborative partner, rather than a threat, can help shift public perception and highlight the positive ways AI can enhance human work.

Fear-Based Narratives Fuel Uncertainty

Popular media often leans on:

- Misinformation about AI’s abilities and risks

- Sensationalism that paints AI as a job killer or existential threat

- Overhyped dystopian scenarios with little relation to current AI tech

These narratives shape how employees and the public view AI, increasing anticipatory anxiety and reducing trust in new tools.

Responsibility to Promote Balanced Perspectives

Organizations, media, and AI advocates share a responsibility to:

- Provide fact-based, transparent information

- Highlight AI’s benefits alongside its risks

- Engage audiences with real-world examples instead of fear-driven hype

This balanced approach helps counteract negative perceptions that stall adoption.

Proven Strategies to Counteract AI Fear

To shift public perception, here’s what works:

- Transparent Communication: Share honest updates about AI capabilities and limitations.

- Education Initiatives: Train employees and stakeholders using clear, jargon-free content.

- Stakeholder Engagement: Involve communities and workers in discussions about AI implementation.

These tactics build trust and lessen resistance born from misunderstanding.

Picture this:

Imagine a company hosting live Q&A sessions where experts dismantle AI myths, answer tough questions, and demo real AI use cases. This openness can turn skeptics into champions overnight.

Key insights to share:

- "Fear thrives in the shadows of misinformation—shine a light with transparency to dispel AI phobia."

- "Public trust isn’t given, it’s earned through honest dialogue and education."

- "Balanced narratives boost adoption by replacing anxiety with actionable understanding."

Tackling media-driven AI fear head-on is essential to unlocking the full potential of AI innovation. When people receive clear, honest information, they move from suspicion to curiosity—and that’s where innovation really takes off.

Learn more in “Encouraging AI uptake: people first, tech second.”

Future Outlook: Transforming AI Phobia into Innovation Momentum by 2030

The next five years will be critical in shifting AI phobia into a catalyst for innovation. Overcoming fear around AI is not just a cultural win; it’s a business imperative that could unlock trillions in global economic value. Ensuring robust AI algorithms and transparency in the underlying AI models will be essential for building long-term trust in AI technologies.

As organizations move forward, the design of AI systems and effective data collection processes will play a crucial role in ensuring ethical and effective deployment of AI solutions.

Looking ahead, it will be important to check whether the measured impact of AI adoption strategies is statistically significant, so that reported benefits are real and measurable rather than noise.
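As one illustration of what a statistically significant result could look like in practice, the sketch below compares tool adoption between two groups using a two-proportion z-test, a standard textbook method. The scenario and the numbers are hypothetical: a control group where 40 of 100 employees adopted a tool, and a pilot group with training where 60 of 100 did. Only Python's standard library is used.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Two-sided z-test for a difference between two proportions.

    Assumes reasonably large groups and a pooled rate strictly
    between 0 and 1 (otherwise the standard error is zero).
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical figures: adopters out of group size.
z, p_value = two_proportion_z_test(40, 100, 60, 100)
print(f"z = {z:.2f}, p = {p_value:.4f}")

# A common (but arbitrary) convention treats p below 0.05 as significant.
is_significant = p_value < 0.05
```

With these made-up numbers the difference clears the conventional 0.05 threshold. With much smaller groups the same 20-point gap might not, which is the practical reason to plan pilot sizes before drawing conclusions.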

Emerging Trends Driving Trust and Adoption

We’re already seeing powerful movements that promise to ease AI anxiety:

- Trust-building initiatives emphasizing transparency and explainability

- New regulatory frameworks aimed at ethical, accountable AI use (OECD principles gaining traction)

- Rapid growth in inclusive AI education programs designed for diverse skill levels

Picture a workforce where employees don’t just tolerate AI but actively participate in its deployment.

Workforce and Organizational Shifts Ahead

Expect significant changes in how people and companies relate to AI by 2030:

- Greater organizational readiness as leaders prioritize culture and psychological safety

- A workforce that embraces AI as a tool, not a threat, promoting cross-industry collaboration

- Employee retention improving due to transparent communication and involvement

Imagine startups and SMBs crafting AI-powered products with buy-in from every team member, reducing friction and accelerating delivery.

Unlocking New Markets and Solving Complex Challenges

Once fear barriers fall, AI’s potential will explode:

- Businesses accessing blue-ocean markets through AI-driven innovation

- Tackling wicked problems like climate change, healthcare access, and personalized education

- Startups and enterprises alike will innovate faster and smarter with AI as a true partner

AI's ability to transform business processes will drive unprecedented innovation in these new markets.

Human creativity remains essential in leveraging AI for breakthrough solutions that set businesses apart.

Think of AI as the creative spark behind the next wave of breakthrough products poised to reshape industries.

Preparing for the AI-Driven Decade

Startups, SMBs, and enterprises should:

- Invest in ongoing AI literacy and training programs

- Commit to transparent, ethical AI governance

- Foster a culture that views AI as augmentative, not adversarial

As one early adopter put it, "The future belongs to those who stop fearing AI and start shaping it."

By focusing first on people, trust, and inclusive engagement, organizations will transform AI apprehension into unstoppable innovation energy.

The key takeaway: AI phobia is temporary—strategic action today lays the groundwork for thriving in the AI-powered world of tomorrow.

Learn about adoption disparities in “In 2025, AI Adoption Will Continue to Lag Innovation” (Mozilla Foundation).

Conclusion

Embracing AI means more than adopting new tools—it’s about transforming fear into fuel for innovation. When you address AI phobia head-on with empathy and clear communication, you unlock your team’s potential to move faster, collaborate better, and create breakthrough solutions.

Turning skepticism into trust is the gateway to not just smoother adoption, but sustained competitive advantage. Your organization’s culture and leadership mindset set the tone for how effectively AI can become a genuine partner in growth.

Keep these powerful actions top of mind:

- Listen openly and honestly to employee fears around AI. Validating concerns builds trust.

- Create small, inclusive pilot projects that empower your teams to experiment and learn.

- Invest in tailored training that connects AI tools directly to day-to-day workflows and benefits.

- Maintain transparent communication about AI goals, risks, and ethical use to clear confusion and build confidence.

Right now, you can spark momentum by starting conversations and launching pilots that integrate your people into the AI journey. The fastest route to overcoming AI phobia is through involvement, clarity, and action—make those your priorities today.

Remember, “The future belongs to those who stop fearing AI and start shaping it.”

By shifting from resistance to curiosity, you don’t just keep pace with technology—you lead the wave of innovation shaping the next decade. The choice to confront AI phobia isn’t just strategic—it’s transformational. The question is: will you step into that future?